CARAMEL presents its results in the field of autonomous and connected vehicles

The H2020 CARAMEL (Artificial Intelligence-based Cybersecurity for Connected and Automated Vehicles) project, coordinated by i2CAT, aims at identifying appropriate responses to cybersecurity threats and vulnerabilities in the context of Cooperative, Connected and Automated Mobility (CCAM).

The project focuses on four pillars: autonomous mobility, connected mobility, electromobility and remote control, each of which introduces different scenarios. Respectively, these scenarios involve attacks that alter the environment perceived by the car, alter its GPS location, interfere with the charging stations available to the car, or interfere with remote operations. To identify (and, where possible, mitigate) these cyber attacks, the project uses AI/ML-based techniques, mostly based on Neural Networks (NNs), and implements them with TensorFlow Lite models and the Keras API. The networks in use include Convolutional Neural Networks (CNNs), and the techniques applied include the Autoencoder (AE) and the Wasserstein Generative Adversarial Network (WGAN).
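The article names the Autoencoder (AE) without describing how it is used. A common pattern for AE-based attack detection is to train the AE on benign data only and then flag inputs it reconstructs poorly. The sketch below shows only that decision step in plain Python, assuming the reconstructions come from an already trained model (for example, one built with Keras). The function names and the "mean + k standard deviations" threshold rule are illustrative assumptions, not CARAMEL's implementation.

```python
import statistics
from typing import Sequence


def reconstruction_error(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared error between an input and its AE reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("input and reconstruction must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(benign_errors: Sequence[float], k: float = 3.0) -> float:
    """Assumed rule: threshold = mean + k * std of errors on attack-free data."""
    mean = statistics.fmean(benign_errors)
    std = statistics.pstdev(benign_errors)
    return mean + k * std


def is_anomalous(error: float, threshold: float) -> bool:
    """Flag samples the AE reconstructs much worse than benign data."""
    return error > threshold


if __name__ == "__main__":
    # Hypothetical reconstruction errors measured on attack-free data.
    benign = [0.010, 0.012, 0.009, 0.011, 0.010, 0.013, 0.008]
    threshold = fit_threshold(benign, k=3.0)

    # A close reconstruction (benign-looking) vs. a poor one (suspicious).
    normal_err = reconstruction_error([1.0, 2.0, 3.0], [1.05, 1.95, 3.02])
    attack_err = reconstruction_error([1.0, 2.0, 3.0], [1.6, 1.1, 3.9])
    print(is_anomalous(normal_err, threshold), is_anomalous(attack_err, threshold))
```

The threshold is fitted on benign data only, because attack samples are usually scarce; the choice of `k` trades false alarms against missed attacks.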

On June 21st, the final outcomes of the project were demonstrated at Panasonic Automotive's facilities in Langen (Hessen), Germany. The first half of the day consisted of a technical presentation of the developments in the first two pillars (autonomous and connected mobility), which were then shown in the second half of the day, either live with a vehicle in motion on site or through simulations using the CARLA simulator.

The live demonstration covered cyberthreat detection and response techniques (by Panasonic), V2X interoperability (by i2CAT), certificate revocation (by Atos), OBU HW anti-tampering (by Nextium) and the CARAMEL backend (by Capgemini). Figure 1 shows the car used for the live demonstration, in motion.

The simulated demonstrations then showed the results of the research and integration work on mitigating cyber attacks on autonomous vehicles. They covered (i) the remote-controlled vehicle activities, with the intrusion detection and estimation algorithm in the Gateway & RCV controller; (ii) pillar 1 (autonomous mobility simulations), with the Location Spoofing attack (by AVL), robust scene analysis and understanding via multimodal fusion (by UPAT), DriveGuard, which counters camera attacks against autonomous vehicles (by UCY), and traffic sign tampering detection and mitigation (by 0Inf); (iii) pillar 2 (connected mobility), with attacks on V2X message transmission (by i2CAT), OBU HW anti-tampering (by Nextium), certificate revocation (by Atos), collaborative GPS spoofing (by UPAT) and holistic Situational Awareness with ML application; and (iv) pillar 3 (electromobility), with the remote detection of cyber attacks on EV charging stations from the cloud back office (by Greenflux).

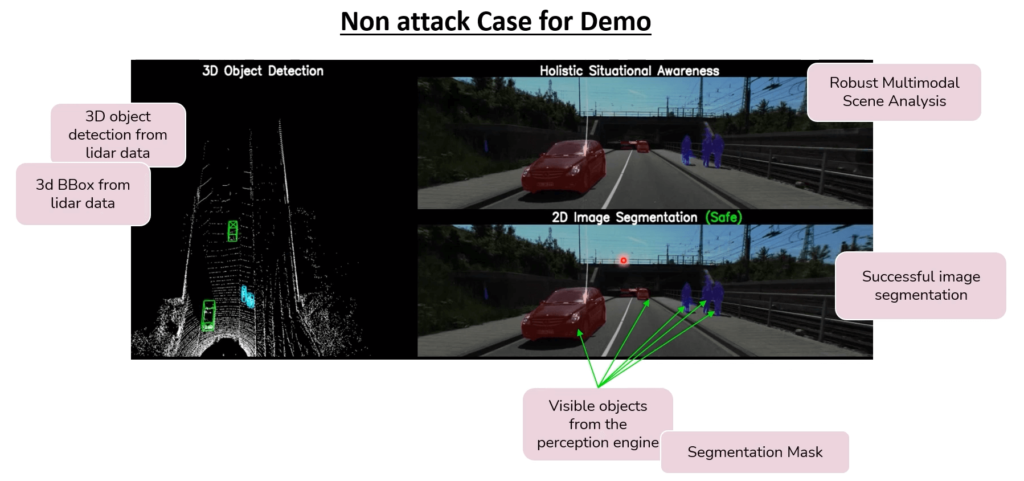

As an example of the pillar 2 demonstrations, the holistic Situational Awareness with ML application presented a base scenario that uses data from the vehicle's sensors, namely LiDAR (Light Detection and Ranging, or Laser Imaging Detection and Ranging) and the camera. In this scenario the system correctly classifies vehicles (in green) and pedestrians and bicycles (in blue). Figure 2 shows how the appropriate colour is applied to the segmentation mask of each kind of object.

However, under attack the classification can fail, producing an incorrect image segmentation that prevents objects from being identified correctly, as shown in Figure 3. To counter this, a multimodal fusion technique combines the LiDAR and camera data to provide a robust scene analysis that can determine whether the situation is safe or under attack and, in the latter case, apply a set of techniques to correct the anomalies.
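The article does not detail how the fusion works. As a purely illustrative sketch, assuming both sensors produce per-cell class labels on a shared grid (a hypothetical representation), a cross-sensor consistency check could flag an attack when the camera disagrees with LiDAR too often and then fall back to the LiDAR labels. The names `agreement` and `fuse`, the label strings and the 0.8 threshold are all hypothetical; this is not UPAT's method.

```python
from typing import List, Tuple

# Hypothetical representation: per-cell labels such as "vehicle", "vru", "none".
Grid = List[List[str]]


def agreement(camera: Grid, lidar: Grid) -> float:
    """Fraction of LiDAR-occupied cells where the camera label matches."""
    occupied = matched = 0
    for cam_row, lid_row in zip(camera, lidar):
        for cam, lid in zip(cam_row, lid_row):
            if lid != "none":
                occupied += 1
                matched += cam == lid  # bool counts as 0 or 1
    return 1.0 if occupied == 0 else matched / occupied


def fuse(camera: Grid, lidar: Grid, min_agreement: float = 0.8) -> Tuple[bool, Grid]:
    """Return (under_attack, fused_labels).

    If the sensors disagree too often, treat the camera output as suspicious
    and use the LiDAR label wherever LiDAR sees an object.
    """
    under_attack = agreement(camera, lidar) < min_agreement
    if not under_attack:
        return False, camera
    fused = [
        [lid if lid != "none" else cam for cam, lid in zip(cam_row, lid_row)]
        for cam_row, lid_row in zip(camera, lidar)
    ]
    return True, fused


if __name__ == "__main__":
    lidar = [["vehicle", "none"], ["vru", "none"]]
    blinded_camera = [["none", "none"], ["none", "none"]]  # e.g. camera attack
    print(fuse(lidar, lidar))
    print(fuse(blinded_camera, lidar))
```

Relying on agreement between independent sensors means an attacker must fool both modalities consistently, which is harder than tampering with the camera alone.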

The H2020 CARAMEL project illustrates how AI and ML techniques can complement a system's knowledge of its environment and improve the results it obtains. Open-VERSO applies some similar techniques (e.g., CNNs) to classify radio signals or to optimise the dimensioning of mobile networks according to their usage.